Proxy with Python Requests – 5 Simple Steps

Using a proxy with Python requests means routing each request that a Python script sends through a proxy server so that the user can stay anonymous on the network. A client that requests data from a server sends the request from a Python script that is configured to use the proxy.

Day by day, data generation is growing at an exponential rate. From casual web users to professional marketers and web scrapers, everyone accesses data for analysing and devising strategies. The internet is a medium overflowing with data, making it convenient for users to gather information from websites. Developers send requests to web pages from their code and use the data gathered from the URL as input for their programs. What if users cannot gather data from blocked sites? This article discusses the usage of proxies with Python requests and how it helps web scrapers.

Feel free to jump to any section to learn more about proxy with python requests!

Table of Contents

- What Is a Proxy?

- What Are Python Requests?

- Why Use Proxy with Python Requests?

- The Installation of Python and Request Libraries

- Using Proxies with Python Requests

- Proxy Authentication

- HTTP Session

- Timeout with Proxy Requests

- Environmental Variable

- Functions in Request Module

- Post JSON Using the Python Request library

- How to Rotate Proxy with Python Requests

- Frequently Asked Questions

- Conclusion

What Is a Proxy?

A proxy is an intermediary device for client-server communication. These proxies work on behalf of the node in the communication and hide their identity from the other nodes in the network. Proxies have special features that ensure speed, anonymity, and uninterrupted data scraping services with zero restrictions. Gathering information from multiple sources is quite an easy process with proxy servers.

What Are Python Requests?

Requests is an HTTP library for Python that allows users to send HTTP requests to a URL. It is not one of Python's built-in modules, so users have to install it and then import it when needed. The library has many methods, such as GET, POST, PUT, HEAD, DELETE, and PATCH.

Why Use Proxy with Python Requests?

People prefer using proxies nowadays to keep their identities under wraps. Proxies hide our IP addresses, so we appear in the network with a proxy address of any type and location. This allows the user to scrape information even from restricted or geo-blocked sites. For example, users in Canada can use a proxy address from the United Kingdom to access sites that are blocked for Canadians and avoid IP bans. To make use of these features, web developers use proxies with the Python requests library so that the target site will not know the actual identity of the user.

The Installation of Python and Request Libraries

Integrating proxy with the python requests library requires the ability to work with Python.

- Basic knowledge of python programming.

- Experience in using Python 3.

- A pre-installed Python IDLE in the system.

- The requests library, installed from the command prompt.

People should make sure they have these prerequisites. The first two are the skills needed to work on a Python script, while the next two are the basic requirements to run Python programs. If the system does not have a Python editor, download the Python version that is compatible with your system configuration. Check out the instructions to download and configure Python in your system. This will require 2GB to 4GB RAM. Once the basic Python installation is done, users should also make sure the necessary libraries are installed. Python does not ship with a built-in requests library, so users have to install the requests library first.

- Open the “Command Prompt“.

- Type “pip freeze.”

- This freeze option will display all the installed libraries of python.

- Check whether the “requests” module is available in the list. If not, install the “requests” library.

pip install requests

- This statement will install the “requests” library.
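The same check can also be done from Python itself. The following is a minimal sketch that uses only the standard library; the helper name `is_installed` is a hypothetical name chosen for this illustration.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be found on the import path."""
    return importlib.util.find_spec(module_name) is not None


if is_installed("requests"):
    print("requests is available")
else:
    print("requests is missing; run: pip install requests")
```

This only checks that the module can be found; it does not import it or verify its version.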

Using Proxies with Python Requests

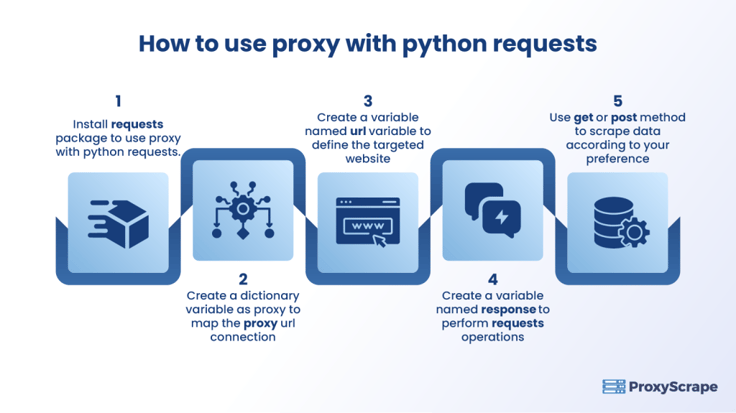

There are 5 simple steps to add proxies with Python requests. These steps cover installing the necessary packages, adding proxy addresses, and sending requests through the methods of the requests module.

Proxy With Python Requests

These 5 steps are discussed in detail below as a stepwise guide, together with the available parameters and what they do.

The primary necessity of working with python requests is importing the requests module.

import requests

The requests module is responsible for sending HTTP requests from Python code. To include proxies with those requests, users have to define a dictionary named ‘proxies’. The keys of this dictionary are the protocol names (‘http’ and ‘https’), and the values are the proxy URLs to use for each protocol. Requests uses this dictionary to decide which proxy a connection goes through.

proxies = {'https': 'https://proxyscrape.com/', 'http': 'http://webproxy.to/'}
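When the same proxy server should handle both HTTP and HTTPS traffic, the dictionary can be built from a host and a port. This is a small sketch only: `build_proxies` is a hypothetical helper, and `proxy.example.com:8080` is a placeholder address, not a working proxy.

```python
def build_proxies(host: str, port: int, scheme: str = "http") -> dict:
    """Build a proxies dictionary that routes both HTTP and HTTPS traffic
    through the same proxy server."""
    proxy_url = f"{scheme}://{host}:{port}"
    # The keys name the protocol of the target URL; the values are proxy URLs.
    return {"http": proxy_url, "https": proxy_url}


# Placeholder values for illustration only.
proxies = build_proxies("proxy.example.com", 8080)
print(proxies)
```

The resulting dictionary can be passed to any request function through the `proxies` argument.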

The next step is to create a variable named ‘URL’ to define the website that is the source of the scraping process.

url = 'http://Yellowpages.com'

The next step is to define a ‘response’ variable to handle the request by passing the ‘URL’ and ‘proxies’ variables.

response = requests.get(url, proxies=proxies)

Users can also print the ‘status code’ to see whether the request is successful or not.

print(f'Status Code: {response.status_code}')

Sample coding

import requests

proxies = {'https': 'https://proxyscrape.com/', 'http': 'https://webproxy.to/'}

url = 'http://Yellowpages.com'

response = requests.get(url, proxies=proxies)

print(f'Status Code: {response.status_code}')

Proxy Authentication

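Users can include proxy authentication by adding the ‘username’ and ‘password’ to the proxy URL, in the form http://user:pass@host:port. The ‘auth’ parameter of a request is a different thing: it sends HTTP Basic credentials to the target website, not to the proxy.

response = requests.get(url, proxies=proxies, auth=('user', 'pass'))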
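Credentials for the proxy itself can be embedded in the proxy URL. The sketch below builds such a URL with the standard library and percent-encodes the credentials so that characters like "@" do not break it. The function name `authenticated_proxy_url` and all values are hypothetical placeholders.

```python
from urllib.parse import quote


def authenticated_proxy_url(user: str, password: str, host: str, port: int) -> str:
    """Return an HTTP proxy URL with percent-encoded credentials."""
    # Encode characters such as "@" or ":" so they cannot break the URL.
    return f"http://{quote(user, safe='')}:{quote(password, safe='')}@{host}:{port}"


# All values below are placeholders for illustration.
proxy_url = authenticated_proxy_url("user", "p@ss", "proxy.example.com", 8080)
proxies = {"http": proxy_url, "https": proxy_url}
print(proxy_url)
```

The `proxies` dictionary built this way is passed to a request in the usual manner, for example `requests.get(url, proxies=proxies)`.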

Sample Coding

import requests

proxies = {'https': 'https://proxyscrape.com/', 'http': 'https://webproxy.to/'}

url = 'http://Yellowpages.com'

response = requests.get(url, proxies=proxies, auth=('user', 'pass'))

HTTP Session

A session object persists settings such as cookies, headers, and proxies across multiple requests, so user-specific data is kept from one request to the next. Users can create a session object by calling the Session class of the requests library.

session = requests.Session()
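As a rough sketch of how a session keeps its settings, the hypothetical helper below creates a session, attaches proxies and a header to it, and returns it. No request is sent; the proxy address and the User-Agent value are placeholders.

```python
import requests


def make_proxy_session(proxies: dict) -> requests.Session:
    """Create a Session whose requests all go through the given proxies."""
    session = requests.Session()
    session.proxies.update(proxies)
    # Headers set here are sent with every request made through the session.
    session.headers.update({"User-Agent": "example-client/0.1"})
    return session


# proxy.example.com:8080 is a placeholder, not a real proxy.
session = make_proxy_session({
    "http": "http://proxy.example.com:8080",
    "https": "http://proxy.example.com:8080",
})
print(session.proxies)
# A request would then be sent with: session.get("http://example.com", timeout=5)
```

Requests have to be sent through the session object itself (`session.get`, `session.post`) for these settings to apply.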

Sample Coding

import requests

session = requests.Session()

session.proxies = {'https': 'https://proxyscrape.com/', 'http': 'https://webproxy.to/'}

url = 'http://Yellowpages.com'

response = session.get(url)

print(f'Status Code: {response.status_code}')

Timeout with Proxy Requests

The “timeout” parameter of HTTP requests allows users to specify a maximum time limit to process requests. This value tells the client how many seconds it should wait for the server to respond before giving up. People can pass this parameter to the HTTP request functions.

response = requests.get(url, timeout=5)

Users can also set the timeout value to “None” if the remote server is slow and the system has to wait for a long time. With “None”, the request waits indefinitely.

response = requests.get(url, timeout=None)
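A timeout is most useful when the resulting error is handled. The sketch below, with a hypothetical helper named `fetch_status`, passes a (connect, read) timeout tuple and returns None when the request times out. The default values are arbitrary choices for illustration.

```python
import requests


def fetch_status(url, proxies=None, timeout=(3.05, 10)):
    """Return the HTTP status code, or None if the request times out.

    The timeout tuple is (connect timeout, read timeout) in seconds.
    """
    try:
        response = requests.get(url, proxies=proxies, timeout=timeout)
    except requests.exceptions.Timeout:
        # Raised when connecting or reading takes longer than allowed.
        return None
    return response.status_code
```

A call such as `fetch_status('http://Yellowpages.com', proxies=proxies)` would then return either a status code or None, instead of raising on a slow proxy.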

Sample Code:

import requests

proxies = {'https': 'https://proxyscrape.com/', 'http': 'https://webproxy.to/'}

url = 'http://Yellowpages.com'

response = requests.get(url, proxies=proxies, timeout=5)

print(f'Status Code: {response.status_code}')

Environmental Variable

People may use the same proxy numerous times. Instead of typing the proxy URL repeatedly, they have the option of an environmental variable. With this option, people can assign a proxy URL to an environmental variable and just use that variable whenever needed.

export HTTP_PROXY='http://webproxy.t'
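The variables can also be set from inside a Python script. The sketch below uses only the standard library to set them and to show what the process then sees; Requests reads the same HTTP_PROXY and HTTPS_PROXY variables when no ‘proxies’ argument is passed. The proxy address is a placeholder.

```python
import os
import urllib.request

# Placeholder proxy address; replace it with a real proxy before use.
os.environ["HTTP_PROXY"] = "http://proxy.example.com:8080"
os.environ["HTTPS_PROXY"] = "http://proxy.example.com:8080"

# getproxies() reports the proxy settings visible to this process.
detected = urllib.request.getproxies()
print(detected.get("http"), detected.get("https"))
```

Variables set this way last only for the current process, whereas an `export` in the shell applies to every program started from that shell.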

Functions in Request Module

The requests library is capable of handling multiple functions related to requests and responses, like get, post, put, delete, patch, and head. Here is the syntax of the popular functions.

- response = requests.get(url)

- response = requests.post(url, proxies = proxies)

- response = requests.head(url)

- response = requests.options(url)

- response = requests.put(url, data={"a": 1})

- response = requests.delete(url)

- response = requests.patch(url, data={"a": 1})
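To see what one of these calls would put on the wire without contacting a server, a request can be built and prepared but not sent. This is a small sketch; the URL `http://example.com/items/1` is a placeholder.

```python
import requests

# Build a PUT request without sending it, to see what would go on the wire.
request = requests.Request("PUT", "http://example.com/items/1", data={"a": 1})
prepared = request.prepare()

print(prepared.method)                   # PUT
print(prepared.url)                      # http://example.com/items/1
print(prepared.headers["Content-Type"])  # application/x-www-form-urlencoded
print(prepared.body)                     # a=1
```

A dictionary passed as `data` is form-encoded, which is why the body appears as `a=1`.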

Post JSON Using the Python Request library

Posting JSON to the server is also possible with Python requests. In this case, the requests.post method takes the URL as its first parameter and the data as the ‘json’ keyword argument. Requests serialises the dictionary into a JSON string and sets the Content-Type header to application/json.
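The serialisation can be checked without sending anything by preparing the request first. This is a minimal sketch, and the endpoint `http://example.com/api` is a placeholder.

```python
import json
import requests

payload = {"ID": 123, "Name": "John"}

# Prepare the POST request without sending it.
prepared = requests.Request("POST", "http://example.com/api", json=payload).prepare()

print(prepared.headers["Content-Type"])  # application/json
print(json.loads(prepared.body))         # the original dictionary
```

Decoding the prepared body gives back the original dictionary, which confirms that the payload travels as JSON rather than as form data.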

Sample Code:

import requests

proxies = {'https': 'https://proxyscrape.com/', 'http': 'https://webproxy.to/'}

url = 'http://Yellowpages.com'

response = requests.post(url, proxies=proxies, json={

    "ID": 123,

    "Name": "John"

})

print(f"Status Code: {response.status_code}, Response: {response.json()}")

How to Rotate Proxy with Python Requests

People can also rotate proxies to improve anonymity. Using the same proxy for a long time for all sites makes it easier for websites and Internet Service Providers to track and ban your proxy address. People prefer using more than one proxy server in a rotational model to deal with these IP bans. They have a pool of proxies, and the system rotates through the pool and assigns a new proxy to each request.

The first step to rotating proxy with Python requests is to import the necessary libraries: requests for sending the requests and random for picking a proxy from the pool. Beautiful Soup is only needed when the proxy list itself is scraped from a web page.

To use rotating proxies with Python requests, we have to configure the proxies or their URLs. Proxyscrape provides paid and free proxies of all categories. People can use residential, datacenter, and private proxies of all types and locations.

ipaddresses = ["proxyscrape.com:2000", "proxyscrape.com:2010", "proxyscrape.com:2100", "proxyscrape.com:2500"]

Then users have to create a ‘proxy request’ method that has three parameters: the request type, the URL, and **kwargs.

def proxy_request(request_type, url, **kwargs):

Within this ‘proxy request’ method, build the proxy dictionary, send the request through it, and return the response. Here, **kwargs passes any extra keyword arguments on to the request.

When the proxy list is scraped from a web page, the extracted response can be converted into a Beautiful Soup object that eases the proxy extraction process. With a fixed list such as ‘ipaddresses’, a random index into the list is enough.

random.randint(0, len(ipaddresses) - 1)

Then create a ‘proxy’ variable that uses the ‘random’ library to pick the index of a proxy address at random from the list, and use that address for both protocols.

proxy = random.randint(0, len(ipaddresses) - 1)

proxies = {"http": ipaddresses[proxy], "https": ipaddresses[proxy]}

response = requests.request(request_type, url, proxies=proxies, timeout=5, **kwargs)

print(f"Current proxy: {proxies['https']}")
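Random selection can pick the same proxy twice in a row. As an alternative to the random approach described here, the sketch below rotates through the pool in a fixed round-robin order using the standard library. The pool addresses and the helper name `next_proxies` are hypothetical.

```python
from itertools import cycle

# Placeholder proxy addresses for illustration only.
proxy_pool = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]
_rotation = cycle(proxy_pool)


def next_proxies() -> dict:
    """Return a proxies dictionary for the next proxy in the pool."""
    proxy = next(_rotation)
    return {"http": proxy, "https": proxy}


# Four calls walk through the pool and wrap around to the first proxy.
for _ in range(4):
    print(next_proxies()["http"])
```

Each call to `next_proxies()` returns a dictionary that can be passed as the `proxies` argument of a request, so consecutive requests leave through different addresses.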

Sample code

import requests

import random

ipaddresses = ["proxyscrape.com:2000", "proxyscrape.com:2010", "proxyscrape.com:2100", "proxyscrape.com:2500"]

def proxy_request(request_type, url, **kwargs):

    # The loop exits after the first request; wrapping the request in
    # try/except would let it retry with another proxy on failure.
    while True:

        # Pick a random index into the proxy pool.
        proxy = random.randint(0, len(ipaddresses) - 1)

        proxies = {"http": ipaddresses[proxy], "https": ipaddresses[proxy]}

        response = requests.request(request_type, url, proxies=proxies, timeout=5, **kwargs)

        print(f"Current proxy: {proxies['https']}")

        break

    return response

Suggested Reads:

The Top 8 Best Python Web Scraping Tools in 2023

How To Create A Proxy In Python? The Best Way in 2023

Frequently Asked Questions

FAQs:

1. What is a Proxy with python requests?

2. Why use proxy with python requests?

3. What is proxy authentication?

Conclusion

This article covered HTTP requests in the Python programming language, along with the necessary libraries, modules, and functions involved in sending an HTTP request. You can import the requests module and use the GET, POST, PATCH, and PUT methods as your requirements dictate. You can add a proxy to your Python requests if you wish to make use of proxy features like anonymity, speed, and scraping capabilities. Users can also use proxy pools and assign proxies to requests in rotation to enhance security.