Web Scraping for Data Science

Organizations now extract enormous volumes of data and analyze them to identify patterns, so that stakeholders can draw informed conclusions. Because data science is growing rapidly and has transformed many industries, it is worth understanding how organizations collect so much data.

To date, data scientists have turned to the web to collect large quantities of data for their needs. So in this article, we will focus on web scraping for data science.

What is Web Scraping in Data Science?

Web scraping, also known as web harvesting, screen scraping, or web data extraction, is the practice of extracting large quantities of data from the web. In data science, the accuracy of a model depends heavily on the amount of data you have. A larger data set also makes training the model easier, since you can test more aspects of the data.

Regardless of the scale of your business, market data and analytics are essential for staying ahead of your competitors. Every decision to improve your business, however small, is driven by data.

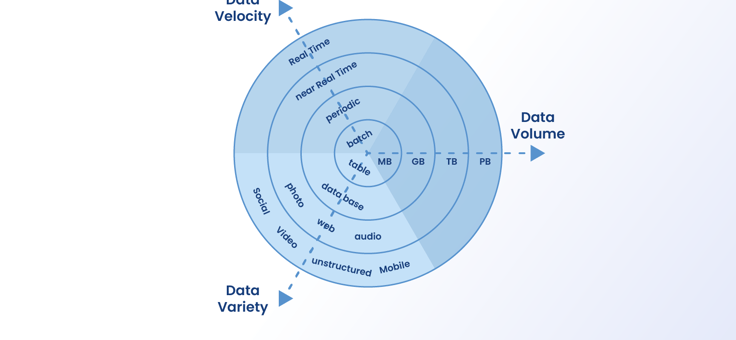

Once you scrape data from diverse sources on the web, you can analyze it immediately, which is known as real-time analysis. There are instances where a delayed analysis would serve no purpose. Typical examples of situations that require real-time analysis are stock price analysis and CRM (Customer Relationship Management).

Why is scraping important for Data science?

The web contains a plethora of data on any given topic, ranging from complex data regarding how to launch a space mission to personal data such as your post on Instagram about what you ate, for instance. All such raw data are of immense value to data scientists who can analyze and draw conclusions on the data by extracting valuable insights from them.

A handful of open data sources and websites provide the specialized data that data scientists require. You could visit such sites and extract the data manually, but that would be time-consuming. Alternatively, you can query the data, and the server will return it to you.

However, the data that you require for data science or machine learning is massive, and a single website is rarely sufficient to meet such needs. This is where you need to turn to web scraping.

Data science involves sophisticated tasks such as NLP (Natural Language Processing) and image recognition, together with AI (Artificial Intelligence), all of which are of immense benefit in daily life. In such circumstances, web scraping is a frequently used technique that automatically downloads, parses, and organizes data from the web.
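To make the "download, parse, organize" steps concrete, here is a minimal sketch that uses only Python's standard library. The HTML table, the `TableParser` class, and the `organize` function are hypothetical names invented for this illustration. The download step is left out so that the sketch runs offline; in practice the HTML string would come from an HTTP request to the target page.

```python
from html.parser import HTMLParser

# Hypothetical page content; a real scraper would download this instead.
SAMPLE_HTML = (
    "<table>"
    "<tr><th>product</th><th>price</th></tr>"
    "<tr><td>Laptop</td><td>899.00</td></tr>"
    "<tr><td>Mouse</td><td>19.50</td></tr>"
    "</table>"
)


class TableParser(HTMLParser):
    """Parse step: collect the text of every table cell, row by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None       # cells of the row currently being read
        self._in_cell = False  # True while inside a <td> or <th>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._in_cell and self._row:
            self._row[-1] += data.strip()


def organize(html):
    """Organize step: turn the parsed rows into a list of dictionaries."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


records = organize(SAMPLE_HTML)
print(records)
```

Each record is a plain dictionary keyed by the column header, which is a convenient shape for loading into a data frame or writing to a CSV file later.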

In this article, we will focus on several web scraping scenarios for data science.

Best practices before you scrape for Data Science

It is vital to check whether the website that you plan to scrape allows scraping by outside entities. Here are the specific steps that you must follow before you scrape:

Robots.txt file - You must check the robots.txt file to see how you or your bot should interact with the website, as it specifies a set of rules for doing so. In other words, it specifies which pages of a website you are and are not allowed to access.

You can easily navigate to it by typing website_url/robots.txt, as it is located in the website’s root folder.
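You can also check these rules programmatically. The sketch below uses `urllib.robotparser` from Python's standard library. The robots.txt content, the `my-scraper` user agent, and the example.com URLs are all placeholders made up for illustration, and the rules are inlined so the example runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs offline.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Load robots.txt rules from a string into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)

# "my-scraper" is a placeholder name for your bot's user agent.
can_fetch_products = parser.can_fetch("my-scraper", "https://example.com/products/")
can_fetch_private = parser.can_fetch("my-scraper", "https://example.com/private/report")
delay = parser.crawl_delay("my-scraper")

print(can_fetch_products, can_fetch_private, delay)
```

Against a live site, you would instead call `parser.set_url(...)` with the address of the site's robots.txt file, followed by `parser.read()`, and then make the same `can_fetch` checks before each request.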

Terms of Use - Make sure that you read the target website’s terms of use. For example, if the terms state that the website does not limit access by bots and spiders and does not prohibit rapid requests to the server, you would be able to scrape.

Copyrights - After extracting data, you need to be careful about where you intend to use it, so that you do not violate copyright laws. If the terms of use do not restrict a particular use of the data, then you would be able to scrape and use it in that way without any harm.

Different use cases of Web Scraping for Data Science

Real-time Analytics

Many web scraping projects need real-time data analytics. Real-time data is data that you can present as it is collected. In other words, this type of data is not stored but passed directly to the end user.

Real-time analytics is entirely different from batch-style analytics, which takes hours or incurs considerable delays to process data and produce valuable insights.

Examples of real-time data include e-commerce purchases, weather events, log files, geolocations of people or places, and server activity.

So let’s dive into some use cases of real-time analytics:

- Financial institutions use real-time analytics for credit scoring to decide whether to renew the credit card or to discontinue it.

- CRM (Customer Relationship Management) is another common type of software where you can use real-time analytics to optimize customer satisfaction and improve business outcomes.

- Real-time analytics is also used in Point of Sale terminals to detect fraud. In retail outlets, real-time analytics plays a handy role in managing customer incentives.

So now the question is, how do you scrape real-time data for analytics?

Since all of the above use cases depend on processing large quantities of data, this is where web scraping comes into play. Real-time analytics can’t take place if the data is not accessed, extracted, and analyzed instantly.

For this reason, a low-latency scraper is used to scrape quickly from target websites. Such scrapers extract data at very high frequencies, keeping pace with the speed at which the website updates. As a result, they provide at least near real-time data for analytics.
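One simple way to approximate this behavior is a polling loop that fetches a page at a fixed interval and passes along only the values that have changed. The sketch below is an illustration under stated assumptions, not a production scraper: `poll` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real HTTP request so that the example runs offline.

```python
import time
from typing import Callable, Iterator


def poll(fetch: Callable[[], str], interval_seconds: float, max_polls: int) -> Iterator[str]:
    """Call fetch repeatedly and yield a value only when it differs from the last one."""
    last = None
    for i in range(max_polls):
        value = fetch()
        if value != last:
            yield value
            last = value
        # Wait between polls, but not after the final one.
        if i < max_polls - 1:
            time.sleep(interval_seconds)


# Stand-in for a real request: replays a fixed series of made-up stock prices.
_fake_prices = iter(["101.2", "101.2", "101.5", "101.4"])


def fake_fetch() -> str:
    return next(_fake_prices)


updates = list(poll(fake_fetch, interval_seconds=0.01, max_polls=4))
print(updates)
```

A shorter interval lowers latency but increases the load on the target website, so the interval should stay within whatever request rate the site's robots.txt file and terms of use allow.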

Natural Language Processing

Natural Language Processing (NLP) is when you provide computers with input in natural languages such as English, as opposed to programming languages such as Python, so that they can understand and process it. NLP is a broad and complicated field because it is not easy to determine what particular words or phrases mean.

One of the most common use cases of NLP is analyzing customers' social media comments about a particular brand to assess how that brand is performing.

Since the web contains dynamic resources such as blogs, press releases, forums, and customer reviews, they can be extracted to form a vast text corpus for Natural Language Processing.
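As a rough illustration of how scraped pages become a text corpus, the sketch below strips the markup from a page, ignores script and style content, and splits the remaining text into lowercase word tokens. It uses only Python's standard library. The review HTML is invented for the example, and `TextExtractor` and `html_to_tokens` are hypothetical names; real NLP pipelines typically use more careful tokenization.

```python
import re
from collections import Counter
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    SKIPPED_TAGS = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def html_to_tokens(html):
    """Return the lowercase word tokens found in the visible text of a page."""
    extractor = TextExtractor()
    extractor.feed(html)
    extractor.close()
    text = " ".join(extractor.chunks)
    return re.findall(r"[a-z']+", text.lower())


# Hypothetical customer review page.
SAMPLE_REVIEW_HTML = """
<html><head><style>p { color: red; }</style></head>
<body><p>Great phone, great battery.</p><script>track();</script>
<p>The screen is too dim.</p></body></html>
"""

tokens = html_to_tokens(SAMPLE_REVIEW_HTML)
word_counts = Counter(tokens)
print(word_counts.most_common(3))
```

Running the same function over many scraped pages and concatenating the token lists gives a basic corpus on which word frequencies or sentiment can be computed.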

Predictive modeling

Predictive modeling is all about analyzing data and using probability theory to estimate outcomes for future scenarios. However, predictive analysis is not about a precise forecast of the future. Instead, it is about forecasting the probability of an outcome.

Every model has predictive variables that can impact future results. You can extract the data that you need for vital predictions from websites through web scraping.

Some of the use cases of predictive analysis are:

- For example, you can use it to identify common customer behavior and products in order to work out risks and opportunities.

- You can also use it to identify specific patterns in data and predict certain outcomes and trends.

The success of predictive analysis largely depends on the presence of vast volumes of existing data. You can formulate an analytical model once you complete the data processing.
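To illustrate what "forecasting the probability" can mean in the simplest case, the sketch below estimates how likely an outcome is for each value of a single predictive variable, based on how often it occurred in past data. This is a frequency estimate with add-one smoothing rather than a full predictive model, and the records (whether a product was discounted and whether it sold out) are made-up values standing in for scraped data. The function name `conditional_probabilities` is hypothetical.

```python
from collections import defaultdict


def conditional_probabilities(records, smoothing=1.0):
    """Estimate P(outcome is True | feature value) from (feature, outcome) pairs.

    Smoothing keeps estimates away from exactly 0 or 1 when data is scarce.
    """
    counts = defaultdict(lambda: [0, 0])  # feature -> [positive count, total count]
    for feature, outcome in records:
        counts[feature][1] += 1
        if outcome:
            counts[feature][0] += 1
    return {
        feature: (positives + smoothing) / (total + 2 * smoothing)
        for feature, (positives, total) in counts.items()
    }


# Made-up history: (pricing state, whether the product sold out).
HISTORY = [
    ("discounted", True),
    ("discounted", True),
    ("discounted", False),
    ("full_price", False),
    ("full_price", False),
    ("full_price", True),
    ("full_price", False),
]

probs = conditional_probabilities(HISTORY)
for feature, probability in probs.items():
    print(f"{feature}: {probability:.2f}")
```

With only a handful of records the estimates are unreliable, which is why the volume of existing data matters so much for predictive analysis.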

Preparing for machine learning models

Machine learning is the concept that allows machines to learn on their own after you feed them training data. Of course, training data varies with each specific use case, but you can once again turn to the web to extract training data for machine learning models with different use cases. Then, once you have training data sets, you can teach the models to perform related tasks such as clustering, classification, and attribution.

It is of utmost importance to scrape data from high-quality web sources because the machine learning model’s performance will depend on the quality of the training data set.
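Scraped data usually needs some cleaning before it can serve as training data. The sketch below shows one possible preparation step, assuming labeled text records: it normalizes whitespace and case, drops empty, unlabeled, and duplicate records, and then splits what remains into training and test sets. The records and the function names `clean_records` and `train_test_split` are hypothetical and written for this example only.

```python
import random


def clean_records(records):
    """Normalize text and drop empty, unlabeled, or duplicate records."""
    seen = set()
    cleaned = []
    for text, label in records:
        normalized = " ".join(text.split()).lower()
        if not normalized or label is None or normalized in seen:
            continue
        seen.add(normalized)
        cleaned.append((normalized, label))
    return cleaned


def train_test_split(records, test_fraction=0.25, seed=0):
    """Shuffle reproducibly, then hold out a fraction of records for testing."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    test_size = max(1, int(len(shuffled) * test_fraction))
    return shuffled[test_size:], shuffled[:test_size]


# Made-up scraped reviews with a duplicate, an empty text, and a missing label.
RAW_RECORDS = [
    ("Great  product", "pos"),
    ("great product", "pos"),
    ("", "neg"),
    ("Arrived broken", "neg"),
    ("Okay for the price", None),
    ("Works as described", "pos"),
    ("Stopped working", "neg"),
]

cleaned = clean_records(RAW_RECORDS)
train_set, test_set = train_test_split(cleaned)
print(len(cleaned), len(train_set), len(test_set))
```

Removing duplicates before splitting matters because the same record appearing in both the training and test sets would make the model look better than it is.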

How proxies can help you with web scraping

The purpose of a proxy is to mask your IP address when you scrape a target website. Since you need to scrape multiple web sources, it is ideal to use a rotating proxy pool. Such websites are also likely to limit the number of times you can connect to them.

In that regard, you need to rotate IP addresses using different proxies. To find out more about the proxies, please refer to our latest blog articles.
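A rotating proxy pool can be sketched in a few lines. In the example below, the proxy addresses are placeholders from a documentation-only IP range, and `ProxyRotator` is a hypothetical helper written for this illustration. It only decides which proxy each request should use and makes no network calls itself.

```python
from itertools import cycle

# Placeholder proxy addresses; replace them with the proxies you actually use.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


class ProxyRotator:
    """Hand out proxies from a pool in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("The proxy pool must not be empty.")
        self._cycle = cycle(proxies)

    def next_proxies(self):
        """Return a scheme-to-proxy mapping for the next request."""
        proxy = next(self._cycle)
        return {"http": proxy, "https": proxy}


rotator = ProxyRotator(PROXY_POOL)

# Each request gets the next proxy; the fourth wraps around to the first.
assigned = [rotator.next_proxies()["http"] for _ in range(4)]
print(assigned)
```

The returned mapping has the shape accepted by `urllib.request.ProxyHandler` in the standard library and by the `proxies` argument of the third-party `requests` library, so it can be passed to whichever HTTP client your scraper uses.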

Conclusion

By now, you have a fair idea about the types of data that you need to scrape for data science. Data science is indeed a complicated field, and one that requires extensive knowledge and experience. As a data scientist, you also need to grasp the various ways in which web scraping is performed.

We hope that this article has provided a fundamental understanding of scraping for data science and that it will be of value to you.